Extracting workers from Rails

Experimenting with Sidekiq workers outside of a Rails app within a Docker environment.

Sidekiq has always been my go-to gem whenever I need some sort of background processing in a Rails app. But one thing that has always felt weird to me about background workers is having the web and worker instances share the same codebase. If all I want is to process some jobs asynchronously, does my worker instance really need to load my entire Rails app?

As an experiment, I decided to have my Rails API post a JSON payload describing a given job straight to Redis. A separate app containing the worker logic then pulls that payload from Redis to process the job.

The following is a walkthrough of that experiment, using Docker for local development. If you prefer to browse the code directly, I've uploaded it to GitHub. And if you prefer to skip to the conclusion of this experiment, feel free to scroll to the bottom.

Setup

At the root, let's start by creating a docker-compose.yml:

```yaml
version: "3"

services:
  api:
    build: ./api
    depends_on:
      - db
      - sidekiq
    ports:
      - "3000:3000"
    volumes:
      - ./api:/api
      - api_bundle:/usr/local/bundle

  db:
    image: postgres:alpine
    environment:
      POSTGRES_PASSWORD: postgres
    ports:
      - "5432:5432"

  redis:
    image: redis:alpine
    ports:
      - "6379:6379"

  sidekiq:
    build: ./sidekiq
    depends_on:
      - redis
    volumes:
      - ./sidekiq:/sidekiq
      - sidekiq_bundle:/usr/local/bundle

volumes:
  api_bundle:
  sidekiq_bundle:
```

This way we can spin up the different apps together and make sure they can communicate with one another.
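Inside the Compose network each service is reachable by its service name, which is why the configuration in this post points at hosts such as redis and db. The Redis URL ends up hard-coded in both apps; here is a minimal sketch of reading it from the environment instead, assuming a REDIS_URL variable that the compose file above does not actually define (the redis_url helper is hypothetical):

```ruby
require 'uri'

# Hypothetical helper: prefer REDIS_URL when it is set, otherwise fall back
# to the Compose service name used throughout this post.
def redis_url(env = ENV)
  env.fetch('REDIS_URL', 'redis://redis:6379')
end

# Passing an empty hash simulates an environment without REDIS_URL.
uri = URI(redis_url({}))
puts uri.host # prints: redis
puts uri.port # prints: 6379
```

Both worker.rb and the gateway could then call such a helper instead of repeating the literal URL.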

Now let's configure a basic worker.

The worker

We can create an app in ./sidekiq that pulls the jobs from Redis, starting with a Gemfile at ./sidekiq/Gemfile:

```ruby
# frozen_string_literal: true

source 'https://rubygems.org'

git_source(:github) { |repo_name| "https://github.com/#{repo_name}" }

ruby '2.5.1'

gem 'sidekiq'
```

Then we configure our Docker image by creating ./sidekiq/Dockerfile:

```dockerfile
FROM ruby:2.5-alpine

WORKDIR /sidekiq

COPY Gemfile* ./

RUN apk update \
    && apk upgrade \
    && bundle install

RUN addgroup -g 1000 -S sidekiq \
    && adduser -u 1000 -S sidekiq -G sidekiq \
    && chown -R sidekiq:sidekiq /sidekiq

USER sidekiq

CMD ["bundle", "exec", "sidekiq", "-r", "./worker.rb"]
```

And finally we need to create our ./sidekiq/worker.rb file, which will contain the actual worker logic:

```ruby
require 'sidekiq'

# Only the server side needs configuring: this process pops jobs off Redis.
Sidekiq.configure_server do |config|
  config.redis = { url: 'redis://redis:6379' }
end

class MyWorker
  include Sidekiq::Worker

  # Simulates work of varying duration depending on the complexity argument.
  def perform(complexity)
    case complexity
    when 'super hard'
      sleep 10
      puts 'This took a while!'
    when 'hard'
      sleep 5
      puts 'Not too bad'
    else
      sleep 1
      puts 'Piece of cake'
    end
  end
end
```

Notice that we only need to configure Sidekiq's server to process jobs; no client configuration is needed, since this app never pushes jobs itself.
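Because the worker app only ever sees a JSON payload, the class name inside it has to match a constant that the worker process has loaded. The following is a simplified sketch of that lookup, not Sidekiq's actual processor (which also runs middleware, handles retries, and so on); the dispatch method and the trimmed MyWorker stand-in are hypothetical, and no Redis is needed to run it:

```ruby
require 'json'

# Stand-in for the MyWorker class in worker.rb, returning its message
# instead of sleeping and printing, so the sketch runs instantly.
class MyWorker
  def perform(complexity)
    case complexity
    when 'super hard' then 'This took a while!'
    when 'hard' then 'Not too bad'
    else 'Piece of cake'
    end
  end
end

# Hypothetical, simplified dispatch: look up the class named in the payload
# and call #perform with the payload's arguments. Unknown names raise NameError.
def dispatch(raw_payload)
  job = JSON.parse(raw_payload)
  worker_class = Object.const_get(job.fetch('class'))
  worker_class.new.perform(*job.fetch('args'))
end

payload = JSON.dump({ 'class' => 'MyWorker', 'queue' => 'default', 'args' => ['hard'] })
puts dispatch(payload) # prints: Not too bad
```

This is also where the coupling shows up: rename MyWorker in the worker app and every payload that still says 'MyWorker' fails with a NameError.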

With this basic worker done, we can now focus on setting up our Rails API.

The Rails API

First we create our app with rails new api -d postgresql --skip-bundle --api.

Then we can add the redis gem to our ./api/Gemfile:

```ruby
gem 'redis'
```

For our container image, we create the following ./api/Dockerfile:

```dockerfile
FROM ruby:2.5-alpine

WORKDIR /api

COPY Gemfile* ./

RUN apk update \
    && apk upgrade \
    && apk add --no-cache postgresql-dev tzdata \
    && apk add --virtual .build-dependencies g++ make libffi-dev libxml2-dev zlib-dev libxslt-dev \
    && bundle install \
    && apk del .build-dependencies

RUN addgroup -g 1000 -S api \
    && adduser -u 1000 -S api -G api \
    && chown api:api /api

USER api

EXPOSE 3000

# Bind to all interfaces so the published port is reachable from the host.
CMD ["bundle", "exec", "rails", "s", "-b", "0.0.0.0"]
```

Our Rails app will need to know how to reach the database service we declared in our docker-compose file. The simplest way to do this is to edit ./api/config/database.yml:

```yaml
default: &default
  adapter: postgresql
  encoding: unicode
  pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %>
  url: 'postgres://postgres:postgres@db:5432'
```

Now, for actually sending the data to Sidekiq, I like to create a dedicated gateway class to encapsulate that logic. We can create the following file at ./api/lib/my_app/gateway/sidekiq.rb:

```ruby
require 'json'
require 'redis'
require 'securerandom'

module MyApp
  module Gateway
    # Pushes jobs straight onto Sidekiq's Redis list, without loading Sidekiq.
    class Sidekiq
      def initialize(redis: Redis)
        @redis = redis.new(url: 'redis://redis:6379')
      end

      # klass: name of the worker class in the Sidekiq app (a String)
      # args:  array of JSON-serializable arguments passed to #perform
      def do_later(klass:, args:)
        message = create_message(klass: klass, args: args)
        @redis.lpush('queue:default', JSON.dump(message))
      end

      private

      # Builds a hash in the job format Sidekiq expects to find in the queue.
      def create_message(klass:, args:)
        now = Time.now.to_f
        {
          'class' => klass,
          'queue' => 'default',
          'args' => args,
          'jid' => SecureRandom.hex(12),
          'retry' => true,
          'created_at' => now,
          'enqueued_at' => now
        }
      end
    end
  end
end
```

To use it, we instantiate the gateway class and call do_later on the instance, passing the name of the worker class (MyWorker in our case) and an array of arguments (['hard'], for example).
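The gateway relies on how Sidekiq uses Redis lists: jobs are pushed onto the head of queue:default with LPUSH, and Sidekiq's server pops them off the tail (with BRPOP), so the oldest job runs first. Here is a minimal in-memory sketch of that ordering; the InMemoryList class is a hypothetical stand-in for the Redis list, so no Redis server is needed:

```ruby
require 'json'
require 'securerandom'

# Hypothetical in-memory stand-in for a Redis list. LPUSH adds at the head
# and RPOP removes from the tail, so together they behave as a FIFO queue.
class InMemoryList
  def initialize
    @items = []
  end

  # Like Redis LPUSH: insert at the head and return the new length.
  def lpush(value)
    @items.unshift(value)
    @items.size
  end

  # Like Redis RPOP: remove from the tail, or return nil when empty.
  def rpop
    @items.pop
  end
end

queue = InMemoryList.new

# Producer (the API): enqueue two messages shaped like the gateway's.
%w[hard easy].each do |complexity|
  queue.lpush(JSON.dump({ 'class' => 'MyWorker', 'queue' => 'default',
                          'args' => [complexity], 'jid' => SecureRandom.hex(12) }))
end

# Consumer (the worker): pop from the opposite end; the first push comes out first.
first = JSON.parse(queue.rpop)
p first['args'] # prints ["hard"]
```

Sidekiq's own client additionally adds the queue name to a queues set, which the Web UI reads to list queues; the gateway above skips that step, which should not affect processing.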

Adding an endpoint

To play with this, let's set up an API endpoint we can POST data to in order to trigger the background job. First we create a route in ./api/config/routes.rb:

```ruby
Rails.application.routes.draw do
  post '/process', to: 'process#create'
end
```

Then we create the matching controller in ./api/app/controllers/process_controller.rb:

```ruby
require 'my_app/gateway/sidekiq'

class ProcessController < ApplicationController
  def create
    job = MyApp::Gateway::Sidekiq.new
    job.do_later(
      klass: 'MyWorker',
      args: [params['complexity']]
    )
    # The job runs in another app, so reply right away with 202 Accepted.
    head :accepted
  end
end
```

This way we send the complexity param as the single argument to the background job.
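Since the gateway serializes the arguments with JSON.dump and the worker receives them parsed back from JSON, only JSON-native values survive the trip unchanged. A small sketch of what MyWorker would actually receive, using a hypothetical round_trip helper:

```ruby
require 'json'

# Hypothetical helper mimicking the trip from the gateway to the worker:
# serialize the args with JSON, then parse them back.
def round_trip(args)
  JSON.parse(JSON.dump({ 'args' => args })).fetch('args')
end

p round_trip(['hard']) # prints ["hard"]
p round_trip([:hard])  # prints ["hard"] (a Symbol comes back as a String)
p round_trip([nil])    # prints [nil] (what a request without complexity sends)
```

With no complexity param, MyWorker therefore receives nil and falls through to its else branch.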

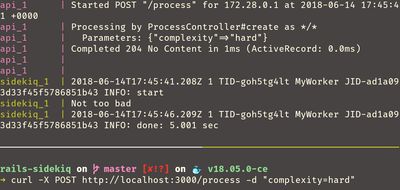

Live Action

OK, time to see it in action. Let's build our container images and start our services. In the terminal:

```shell
docker-compose build
docker-compose run --rm api rails db:create db:migrate
docker-compose up
```

In a separate terminal window, we can send a POST request:

```shell
curl -X POST http://localhost:3000/process -d "complexity=hard"
```

About five seconds later, the sidekiq service's output should print Not too bad.
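If you would rather script the request in Ruby, the same form-encoded POST can be built with the standard library's Net::HTTP. This is a sketch that assumes the API is published on localhost:3000 as in the compose file; the SEND environment variable is a hypothetical guard so the request is only sent on demand:

```ruby
require 'net/http'
require 'uri'

uri = URI('http://localhost:3000/process')

# Same request as the curl command: a form-encoded POST with complexity=hard.
request = Net::HTTP::Post.new(uri)
request.set_form_data('complexity' => 'hard')

# Only touch the network when explicitly asked to (hypothetical SEND guard).
if ENV['SEND'] == '1'
  response = Net::HTTP.start(uri.host, uri.port) { |http| http.request(request) }
  puts response.code
end
```

Running it with SEND=1 while the services are up should trigger the same job as the curl command.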

Takeaway

On one hand, I'm quite happy with the separation of responsibilities here. If we wanted to use Sidekiq with a different API, even one not written in Ruby, this setup would still work. Conversely, if we needed to change our background processing engine, the only change required in our API would be swapping our gateway for a new one.

On the other hand, having one app know the class names of another one still sounds like too much coupling.
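The gateway swap mentioned in the first point works because callers only depend on do_later(klass:, args:). Here is a sketch of that idea with a hypothetical MyApp::Gateway::Recording, which stores jobs instead of sending them (handy in tests), and a hypothetical enqueue_processing method that receives the gateway as a parameter rather than instantiating MyApp::Gateway::Sidekiq itself the way the controller does:

```ruby
module MyApp
  module Gateway
    # Hypothetical gateway with the same interface as MyApp::Gateway::Sidekiq
    # that records jobs in memory instead of pushing them to Redis.
    class Recording
      attr_reader :jobs

      def initialize
        @jobs = []
      end

      def do_later(klass:, args:)
        @jobs << { klass: klass, args: args }
      end
    end
  end
end

# Hypothetical caller: it only needs an object that responds to do_later,
# so any gateway can be passed in.
def enqueue_processing(gateway, complexity)
  gateway.do_later(klass: 'MyWorker', args: [complexity])
end

gateway = MyApp::Gateway::Recording.new
enqueue_processing(gateway, 'hard')
p gateway.jobs
```

Note that the class name 'MyWorker' still travels with every job, so this only addresses the engine swap, not the coupling described above.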

Sidekiq's wiki has more information if you want to push jobs to it without Ruby, or want more details on the job format. You can also check out Faktory (by the creator of Sidekiq), which has clients written in several languages.

Did you try something similar in a past project? Were there any pain points with this approach? Let me know in the comments ;)